About

Maggot, a research data cataloging tool

Background

Within collectives (units, platforms, large projects, etc.), organizing, documenting, storing, and sharing data is a challenge, yet it is necessary in order to have visibility on what these collectives produce: datasets, software, databases, images, sounds, videos, analyses, code, etc.

Main objectives

Data sharing still meets with a great deal of reluctance, sometimes justified, but often masking a lack of will. The main objective is therefore to start by creating a culture of sharing metadata. Metadata describe the data and give users more context, and they are less sensitive than the data themselves. Sharing them is also, and above all, one of the prerequisites for open science.

The first step is to promote good data management, while keeping metadata sharing in mind. One of the main concerns has been how to "capture" descriptive metadata as easily as possible by drawing on users' own vocabulary, then structuring it for 1) transparency and reproducibility, and 2) reuse. Regarding the latter point, the aim is to one day be able to conduct large-scale meta-analyses. This is one reason why metadata are crucial, though not the only one.

Methodology

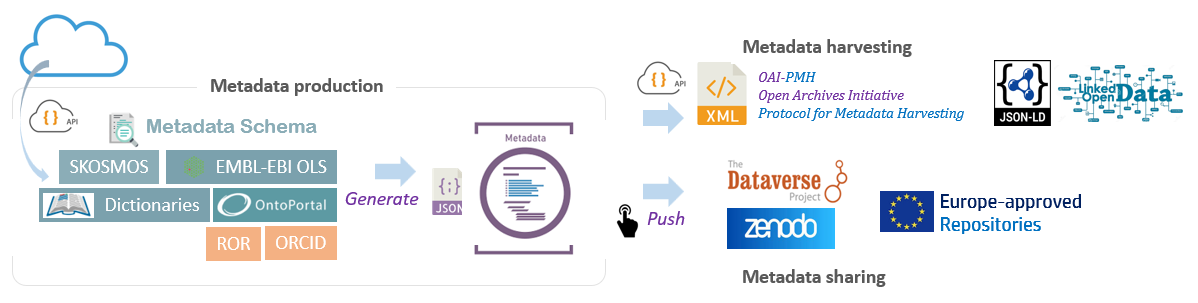

Since the organization of metadata must follow a schema (i.e., a specification of which metadata are expected), the one proposed is based on that of the Dataverse Project, which is used by many scientific institutions (e.g., the French institutional data warehouse, Recherche Data Gouv). This is the default schema that we recommend using. It is nevertheless possible to adapt it.

Concerning semantics, we recommend a gradual approach towards the adoption of standardized controlled vocabularies. For example, a simple dictionary of the business vocabulary used by the community in the scientific field concerned may be perfectly sufficient. Subsequently, one can consider creating a thesaurus, with or without mappings to existing ontologies, or, better still, enriching existing ones (e.g., Thesaurus-INRAE). When domain ontologies exist, they can be adopted progressively by selecting those that are relevant for the collective and by drawing up a comprehensible landscape of the context in which they sit.

The metadata thus produced are used to feed a local data warehouse intended to be subsequently consulted by all the people in the collective. Hence the importance of both the metadata schema and the vocabulary repositories specific to each collective. But these same metadata can also feed other warehouses, namely Dataverse and Zenodo. Similarly, these metadata must be able to be harvested in order to carry out meta-analyses. It is therefore necessary to set up gateways between the different formats.
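As an illustration of such a gateway between formats, the following minimal sketch maps one internal record to both a schema.org `Dataset` (as JSON-LD) and simple Dublin Core elements. The internal field names (`title`, `authors`, etc.) and the sample values are hypothetical and are not taken from the Maggot schema; only the target property names come from schema.org and Dublin Core.

```python
import json

# Hypothetical internal record: field names and values are illustrative only.
record = {
    "title": "Example dataset",
    "description": "A short description of the dataset.",
    "keywords": ["metabolomics", "tomato"],
    "authors": ["Jane Doe"],
    "license": "https://creativecommons.org/licenses/by/4.0/",
}


def to_schema_org(rec):
    """Map the internal record to a schema.org Dataset expressed as JSON-LD."""
    return {
        "@context": "https://schema.org",
        "@type": "Dataset",
        "name": rec["title"],
        "description": rec.get("description", ""),
        "keywords": rec.get("keywords", []),
        "creator": [{"@type": "Person", "name": n} for n in rec.get("authors", [])],
        "license": rec.get("license"),
    }


def to_dublin_core(rec):
    """Map the same record to simple Dublin Core element names."""
    return {
        "dc:title": rec["title"],
        "dc:description": rec.get("description", ""),
        "dc:subject": rec.get("keywords", []),
        "dc:creator": rec.get("authors", []),
        "dc:rights": rec.get("license"),
    }


if __name__ == "__main__":
    print(json.dumps(to_schema_org(record), indent=2))
```

Keeping each crosswalk as a small pure function makes it easy to add a further target format without touching the stored metadata.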

Tools and technologies

The inventory of online vocabularies identifies three main types of resources: 1) ontology portals based on OntoPortal, such as AgroPortal and BioPortal; 2) thesauri based on SKOSMOS, such as Thesaurus-INRAE and LOTERRE; and 3) ontology portals based on the EMBL-EBI ontology search service, i.e., the Ontology Lookup Service. All three types are supported in order to maximize relevance in the choice of vocabularies. Since all these vocabularies are available on the Internet, and given the volume of data they represent, the option chosen is to retrieve terms on demand through connectors to these portals, via their APIs (Application Programming Interfaces).
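The idea of one connector per portal type can be sketched as a small table of search endpoints plus a URL builder. This is only a sketch: the base URLs are placeholders, and the paths and parameter names are assumptions that should be checked against each service's API documentation. It does not reproduce the connectors actually implemented in Maggot.

```python
from urllib.parse import urlencode

# Placeholder base URLs (hypothetical). Paths and parameter names are
# assumptions to verify against each portal's API documentation.
PORTALS = {
    "ontoportal": {"base": "https://ontoportal.example.org", "path": "/search", "term_param": "q"},
    "skosmos": {"base": "https://skosmos.example.org", "path": "/rest/v1/search", "term_param": "query"},
    "ols": {"base": "https://ols.example.org", "path": "/api/search", "term_param": "q"},
}


def build_search_url(portal, term, **extra):
    """Build the on-demand search URL for one portal type and one user term."""
    cfg = PORTALS[portal]
    params = {cfg["term_param"]: term, **extra}
    return f'{cfg["base"]}{cfg["path"]}?{urlencode(params)}'


if __name__ == "__main__":
    for name in PORTALS:
        print(build_search_url(name, "soil water"))
```

Because terms are fetched only when a user types them, nothing has to be mirrored locally; adding a fourth portal type would amount to adding one entry to the table.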

Other additional but useful resources are also used (via their APIs) in order to minimize manual entry, and therefore identification errors, and to avoid duplicates. Examples include the international registry of institutions (Research Organization Registry, ROR) and the registry of persistent identifiers for people (ORCID).
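One simple way to catch identification errors before even querying a registry is to verify the check digit of an ORCID iD, which is computed with the ISO 7064 MOD 11-2 algorithm. The sketch below is an illustrative local check, not a description of what Maggot itself does; the function names are hypothetical.

```python
import re


def orcid_check_digit(base_digits):
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid):
    """Check the format (four hyphen-separated groups) and the final check character."""
    if not re.fullmatch(r"\d{4}-\d{4}-\d{4}-\d{3}[\dX]", orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_digit(digits[:15]) == digits[15]


if __name__ == "__main__":
    print(is_valid_orcid("0000-0002-6687-7169"))
```

A check like this only detects typing mistakes; confirming that the identifier belongs to the intended person still requires a lookup in the registry.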

Regarding metadata harvesting, which consists of collecting metadata via an API in order to store them on another platform, the standard OAI-PMH protocol (Open Archives Initiative Protocol for Metadata Harvesting) was chosen, based on the Dublin Core metadata schema. Metadata can also be harvested in JSON-LD format based on the schema.org metadata schema. In both cases, gateways (metadata crosswalks) were set up.
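On the harvester side, an OAI-PMH exchange boils down to a `ListRecords` request with `metadataPrefix=oai_dc` and the parsing of the Dublin Core elements in the XML response. The following minimal sketch uses only the Python standard library; the repository URL and the sample response are made up for illustration and do not come from a real Maggot instance.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# Standard OAI-PMH and Dublin Core namespaces.
NS = {
    "oai": "http://www.openarchives.org/OAI/2.0/",
    "dc": "http://purl.org/dc/elements/1.1/",
}

# Made-up response, shaped like an OAI-PMH ListRecords answer in oai_dc.
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:example.org:dataset-1</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>Example dataset</dc:title>
          <dc:creator>Doe, Jane</dc:creator>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>"""


def list_records_url(base_url, metadata_prefix="oai_dc"):
    """Build a ListRecords request URL for a given OAI-PMH endpoint."""
    query = urlencode({"verb": "ListRecords", "metadataPrefix": metadata_prefix})
    return f"{base_url}?{query}"


def parse_records(xml_text):
    """Extract identifier, titles and creators from each harvested record."""
    root = ET.fromstring(xml_text)
    records = []
    for rec in root.iterfind(".//oai:record", NS):
        records.append({
            "identifier": rec.findtext("oai:header/oai:identifier", default="", namespaces=NS),
            "titles": [e.text for e in rec.iterfind(".//dc:title", NS)],
            "creators": [e.text for e in rec.iterfind(".//dc:creator", NS)],
        })
    return records


if __name__ == "__main__":
    print(list_records_url("https://repo.example.org/oai"))
    print(parse_records(SAMPLE))
```

A real harvester would also follow the `resumptionToken` that OAI-PMH uses for paging, which is left out here for brevity.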

Locks and levers

Data management is still far from being a major concern in collectives, even when it is stated as an objective to be achieved within the framework of a data policy, often included in a quality approach. This is why it is often necessary to emphasize that "Open Data" does not mean "Open Bar". Metadata alone may very well be sufficient, provided that the conditions under which the data are accessible are specified. It is therefore urgent to develop a culture of metadata sharing.

Furthermore, given the limited time that can be devoted to this task, tools are needed that simplify data entry as much as possible. For example, it is easier to select a term from a drop-down list based on professional vocabulary than to choose from a multitude of ontologies. It is therefore not surprising that the greatest difficulty encountered by the various sites was the choice of a controlled vocabulary repository. Indeed, it is common not to find all the terms needed to describe a metadata item within a single thesaurus, an ontology, or even a set of ontologies. In particular, many business vocabularies are absent from these repositories. The solution in these cases is to create a dictionary that groups all the desired terms, mixing those from one or more ontologies, those from thesauri, and the business vocabularies. This constitutes a sort of new mini-thesaurus. A data manager is therefore required to do this compilation work upstream and thus build a controlled vocabulary repository that evolves over time, as part of a process of continuous improvement.
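The compilation work described above can be pictured as merging term lists from several sources into one deduplicated dictionary while keeping track of where each term came from. The sketch below is only an illustration: the data layout, the source names, and the `example.org` URIs are hypothetical, and the rule that the first source wins on duplicate labels is an arbitrary choice.

```python
def build_dictionary(sources):
    """Merge term lists; for duplicate labels (case-insensitive) the first source wins."""
    merged = {}
    for source_name, terms in sources.items():
        for term in terms:
            label = term["label"].strip()
            key = label.lower()
            if key not in merged:
                merged[key] = {"label": label, "uri": term.get("uri"), "source": source_name}
    # Sorted output suits a drop-down list.
    return sorted(merged.values(), key=lambda t: t["label"].lower())


# Hypothetical inputs: terms picked from an ontology, a thesaurus, and a local business vocabulary.
sources = {
    "ontology": [{"label": "Metabolomics", "uri": "https://example.org/onto/0001"}],
    "thesaurus": [
        {"label": "metabolomics", "uri": "https://example.org/thes/42"},
        {"label": "Tomato", "uri": "https://example.org/thes/7"},
    ],
    "local": [{"label": "Greenhouse B2"}],
}

if __name__ == "__main__":
    for entry in build_dictionary(sources):
        print(entry)
```

Local terms simply have no URI here; a data manager could later attach one if the term is added to a thesaurus, which fits the continuous-improvement process.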

Another objective was to enable producers to obtain metadata that better respect the FAIR principles, without their always having to be fully aware of it. This was achieved by distributing the concerns among the different actors (data managers, data producers, data curators) according to their skills and responsibilities.

Perspectives

Since the "capture" of metadata is crucial, each item should ideally be captured only once, at the most relevant stage of the data lifecycle (e.g., the name of a project at the time of its submission to funders). Each additional entry, on the other hand, can be a source of error, confusion, or duplication. This is why the trend towards interconnecting the different online resources within an information flow organized in stages needs to be reinforced. First comes the description of the project and its participants, with general keywords (ANR-type resources); then the data management plan (DMP), which describes the types of data, the licenses, etc. (e.g., DMP Opidor); then the descriptive metadata specific to each project (e.g., Maggot); and finally the structural metadata of each dataset (e.g., ODAM), up to the final dissemination (e.g., Dataverse). Consequently, each stage must retrieve the metadata from upstream and then transmit them downstream with the added value specific to that stage. This is what “machine-actionable” means, i.e., exploitable by machines.
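The staged flow can be sketched as a chain in which each stage receives the upstream record and may only add new fields, never re-enter existing ones. This is a conceptual illustration only: the stage names echo the examples in the text, while the field names and values are hypothetical and no real exchange format between these tools is implied.

```python
def enrich(upstream, stage, additions):
    """Return the upstream metadata plus this stage's additions.

    Re-entering a field already captured upstream is rejected, so that
    each item is captured only once.
    """
    clashes = set(upstream) & set(additions)
    if clashes:
        raise ValueError(f"{stage} re-enters fields captured upstream: {sorted(clashes)}")
    record = dict(upstream)
    record.update(additions)
    return record


# Hypothetical fields added at each stage of the flow.
funding = enrich({}, "project submission", {"project_name": "Example project"})
dmp = enrich(funding, "data management plan", {"license": "CC-BY-4.0"})
descriptive = enrich(dmp, "descriptive metadata", {"keywords": ["tomato"]})
structural = enrich(descriptive, "structural metadata", {"variables": ["sample_id", "weight"]})

if __name__ == "__main__":
    print(structural)
```

The record that reaches the dissemination stage then carries everything entered upstream, each item typed only once.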

Links

- Source code on GitHub: inrae/pgd-mmdt

- Issue tracker: inrae/pgd-mmdt/issues

- Online instance: INRAE UMR 1322 BFP

Publication

- Daniel Jacob, François Ehrenmann, Romain David, Joseph Tran, Cathleen Mirande-Ney, Philippe Chaumeil, An ecosystem for producing and sharing metadata within the web of FAIR Data, GigaScience, Volume 14, 2025, giae111, DOI:10.1093/gigascience/giae111

Related articles

- Usages d'AgroPortal dans des systèmes d'information à INRAE - hal-05077024

Contacts

- Daniel Jacob (INRAE UR BIA-BIBS - 0000-0002-6687-7169)

Designers / Developers

- Daniel Jacob (INRAE UR BIA-BIBS)

- François Ehrenmann (INRAE UMR BioGECO)

- Philippe Chaumeil (INRAE UMR BioGECO)

Contributors

- Julien Paul (AGAP institute)

- Romain David

- Amélie Masson, Sandrine Mouret, Sébastien Perrin

License

- GNU GENERAL PUBLIC LICENSE Version 3, 29 June 2007 - GPLv3